Having trouble getting your WordPress website crawled or indexed in search? When you log in to Google’s Search Console and request indexing, are you met with all sorts of error messages about robots.txt and/or ‘noindex’ meta tags? If so, you’ve come to the right place. In this blog post I’m going to walk you through these two common issues and how to resolve them.

Troubleshooting Index & Crawl Issues: Where to Start

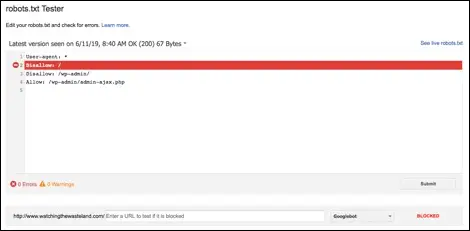

First things first, let’s narrow down the problem. Log in to Google Search Console, then paste your website’s homepage URL into the robots.txt tester and hit submit. (For now, this tool only exists in the old version of Google Search Console.) If the result is “BLOCKED,” see Issue #1; if it’s “ALLOWED,” see Issue #2 below.

Issue #1: Domain or URL Blocked by Robots.txt

If the disallow line lights up red and you see the word “BLOCKED” appear in the box in the lower right-hand corner, like in the screenshot below, the robots.txt file is the culprit. To undo this, you’re going to need to be able to access and edit* the robots.txt file for your website.

*If you are not the person who typically plays around in the backend of your website, then I strongly encourage you to reach out to your website developer, IT person, or whoever it is that handles website maintenance.

Now in the example above, there are two things going on, one good and one bad given our current predicament. The /wp-admin/ path is purposefully disallowed because we do not want the backend portion of our website to be crawled by any of the search engines. That should stay.

However, the Disallow: / line is where the trouble lies. That line, or should I say forward slash, blocks all search engines from crawling your website…like, all of it. So in order to unblock your site, the Disallow: / line needs to be removed from the robots.txt file.

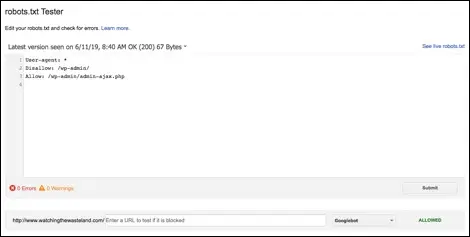
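If you’d like to see for yourself what that single slash does, here is a minimal Python sketch using the standard library’s `urllib.robotparser`. The two robots.txt bodies and the example.com URLs are made up for illustration (they are not copied from the screenshots), and `is_allowed` is just a helper name I picked. Python’s parser is not guaranteed to agree with Googlebot in every edge case, so treat this as a rough sanity check rather than a replacement for the tester.

```python
from urllib.robotparser import RobotFileParser

# Illustrative robots.txt bodies (assumed for this sketch, not taken from a real site).
BLOCKING_RULES = """\
User-agent: *
Disallow: /
Disallow: /wp-admin/
"""

FIXED_RULES = """\
User-agent: *
Disallow: /wp-admin/
"""


def is_allowed(robots_txt: str, url: str, user_agent: str = "Googlebot") -> bool:
    """Return True if the given robots.txt text lets user_agent crawl url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# With "Disallow: /" present, even the homepage is blocked.
print(is_allowed(BLOCKING_RULES, "https://example.com/"))          # False
# After removing that line, the homepage is crawlable again...
print(is_allowed(FIXED_RULES, "https://example.com/"))             # True
# ...while the backend stays off limits, as intended.
print(is_allowed(FIXED_RULES, "https://example.com/wp-admin/"))    # False
```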

It literally only takes one character to throw a monkey wrench into things. Once the necessary edit has been made to the file, drop the homepage URL back in the robots.txt tester to check if your site is now welcoming search engines. If things are hunky dory, the box on the bottom right will say “ALLOWED” in green and search engines can now begin to crawl the site.

That fix should unblock your site sitewide (or at least every page not specifically disallowed, like /wp-admin/ above), but feel free to copy and paste a couple of additional site pages into the tester tool just to ensure that the issue has been resolved for more than just your homepage.
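If you have more than a handful of pages to spot-check, that step can be scripted. Below is a rough sketch, again assuming Python and its built-in `urllib.robotparser`; `load_robots` and `blocked_urls` are hypothetical helper names, and the URLs in the commented usage are placeholders you would swap for your own. It only reports what Python’s parser thinks, so the tester in Search Console remains the final word.

```python
from urllib.parse import urljoin
from urllib.robotparser import RobotFileParser


def load_robots(site_url: str) -> RobotFileParser:
    """Download and parse the live robots.txt for a site (makes a network request)."""
    parser = RobotFileParser()
    parser.set_url(urljoin(site_url, "/robots.txt"))
    parser.read()
    return parser


def blocked_urls(parser: RobotFileParser, urls: list[str],
                 user_agent: str = "Googlebot") -> list[str]:
    """Return the subset of urls that the parsed rules disallow for user_agent."""
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


# Example usage (placeholder URLs; uncomment and replace with your own pages):
# parser = load_robots("https://www.example.com/")
# pages = [
#     "https://www.example.com/",
#     "https://www.example.com/about/",
#     "https://www.example.com/wp-admin/",
# ]
# print(blocked_urls(parser, pages))
```

An empty list for your public pages is what you are hoping for; seeing /wp-admin/ in the output is expected.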

If you’d like to learn more about this particular brand of bots, check out Yoast.com’s Ultimate Guide to Robots.txt.

Issue #2: Remove ‘noindex’ Meta Tag in WordPress

If the above issue is not what’s plaguing your website, as in everything’s coming up “ALLOWED” (as it should be), there’s another common reason your WordPress website may not be showing up in search – the pesky ‘noindex’ tag.

To see if this is the case, toggle back to the new version of Search Console and paste any URL in the “Inspect any URL in…” search field at the top of the page and hit enter.

If the URL Inspection report displays the following message: No: ‘noindex’ detected in ‘robots’ meta tag, it’s a single checkbox setting in the backend of WordPress that’s causing all this ruckus.

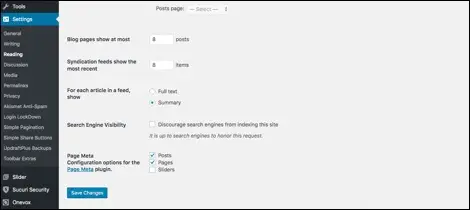
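For the curious, the report is reacting to a `robots` meta tag in the page’s HTML. Here is a small sketch of how you might look for it yourself, using Python’s built-in `html.parser`. The sample markup is illustrative only (the exact tag WordPress prints varies by version), and `RobotsMetaParser` and `has_noindex` are names I made up for this example. It does not look at HTTP headers or anything added by JavaScript.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots" content="..."> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        # Attribute values can be None (e.g. <meta name>), so default to "".
        attr = {name: (value or "") for name, value in attrs}
        if attr.get("name", "").strip().lower() != "robots":
            return
        for directive in attr.get("content", "").split(","):
            directive = directive.strip().lower()
            if directive:
                self.directives.append(directive)


def has_noindex(html: str) -> bool:
    """Return True if the HTML contains a robots meta tag with 'noindex'."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" in parser.directives


# Illustrative markup, assumed for this sketch.
DISCOURAGED = "<html><head><meta name='robots' content='noindex, nofollow' /></head></html>"
VISIBLE = "<html><head><meta name='robots' content='max-image-preview:large' /></head></html>"

print(has_noindex(DISCOURAGED))  # True
print(has_noindex(VISIBLE))      # False
```

You could paste in the output of “View Page Source” from your own homepage to run the same check.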

To unblock search engines from indexing your website, do the following:

- Log in to WordPress

- Go to Settings → Reading

- Scroll down the page to where it says “Search Engine Visibility”

- Uncheck the box next to “Discourage search engines from indexing this site”

- Hit the “Save Changes” button below

If you’re using the Yoast SEO – WordPress plugin, also check the blog post settings to ensure that they are similarly set to allow indexing.

Once that is complete, go back to Search Console and resubmit the URL you tried earlier. If your settings are configured properly, everything should be singing a different tune. Now when you submit a URL, the URL inspection report should be void of all warnings and error messages, at least ones related to indexing and crawlability, and you’ll be able to “Request Indexing,” which I imagine was your goal all along.

I hope that helps, but if the above steps did not provide a solution to your current quandary, I recommend checking out this Google Webmasters support article about ‘noindex’ to learn more.

An obvious yet crucial part of SEO is getting your site to appear in search results. You need to ensure that your website can be crawled and indexed, which means removing the ‘noindex’ tag and making sure robots.txt is not blocking the public parts of your site. These settings are essential for success. Have PCG Digital monitor Search Console for warnings or wonky behavior and troubleshoot these issues with the advice and resources provided above.

Learn More From the SEO Team at PCG Digital

We hope these instructions on unblocking robots.txt and removing ‘noindex’ on your WordPress pages help create more visibility for your dealership website. As a part of our services, our PCG Digital SEO team specializes in hyper-localized marketing to help your dealership get discovered by local shoppers. Contact us to receive a free digital audit of your site and learn how you can improve your automotive marketing strategies.